If you have worked with Kubernetes, chances are high that you have heard of the ETCD database. ETCD is typically used as a database in distributed systems, the most famous example being Kubernetes, where you will typically find ETCD on the controller nodes of the cluster. For high-availability configurations:

- ETCD is run on an odd number of nodes.

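- In this cluster, ETCD runs as a process on the controller nodes rather than as a pod. (This depends on the installer: kubeadm, for example, runs ETCD as a static pod in the kube-system namespace.)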
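Why an odd number? ETCD uses the Raft consensus algorithm, so a write only commits once a majority (quorum) of members agree. The small sketch below (the function names `quorum` and `tolerance` are made up for illustration) prints the quorum size and how many member failures a cluster can survive; note that 4 members tolerate no more failures than 3.

```shell
#!/bin/sh
# Members that must agree for a write to commit: floor(n/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that can fail while a quorum is still reachable
tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Print a small table for cluster sizes 1..7
for n in 1 2 3 4 5 6 7; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(quorum "$n")" "$(tolerance "$n")"
done
```

Going from 3 to 4 members raises the quorum from 2 to 3 without adding fault tolerance, which is why even-sized clusters are avoided.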

The official definition:

A distributed, reliable key-value store for the most critical data of a distributed system

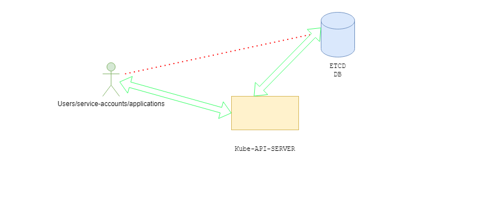

When someone runs kubectl to query the status of Kubernetes objects, the API server looks up that object's state in the ETCD database. Users typically do not interact with the ETCD database directly; instead, they query the API server via kubectl or the REST API. This means that, in the typical scenario, the primary consumer of the ETCD database is the API server.

What if you want to see what is inside the ETCD Database directly?

Just as kubectl is the client for the kube-apiserver, “etcdctl” is the client for querying the ETCD database.

Step-1: Install etcdctl (on Ubuntu/Debian it is shipped in the etcd-client package)

sudo apt-get install etcd-client
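The commands in this post prefix etcdctl with `ETCDCTL_API=3`. Older etcdctl builds default to the v2 API, while etcdctl 3.4 and later use v3 by default, so the prefix is harmless either way. A minimal sketch (the helper name `needs_api_env` is hypothetical) that tells you whether a given etcdctl version still needs it:

```shell
#!/bin/sh
# Succeeds (exit 0) if an etcdctl of this version defaults to the v2 API,
# i.e. ETCDCTL_API=3 must be set explicitly. Argument: "major.minor.patch".
needs_api_env() {
  major=${1%%.*}     # text before the first dot
  rest=${1#*.}       # text after the first dot
  minor=${rest%%.*}  # text before the second dot
  [ "$major" -lt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -lt 4 ]; }
}
```

For example, `ETCDCTL_API=3 etcdctl version` prints a line such as `etcdctl version: 3.2.26`; passing the third field of that line to `needs_api_env` succeeds for 3.2.x and fails for 3.4.x and newer.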

Step-2: Check the IP address of the node where ETCD is running (typically the controller node)

kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

kube-master Ready control-plane,master 7d7h v1.23.3 192.168.1.141 <none> Ubuntu 20.04.3 LTS 5.4.0-107-generic containerd://1.5.9

kube-worker-1 Ready <none> 7d7h v1.23.3 192.168.1.142 <none> Ubuntu 20.04.3 LTS 5.4.0-107-generic containerd://1.5.9

kube-worker-2 Ready <none> 7d7h v1.23.3 192.168.1.144 <none> Ubuntu 20.04.3 LTS 5.4.0-107-generic containerd://1.5.9

kube-worker-3 Ready <none> 7d6h v1.23.3 192.168.1.147 <none> Ubuntu 20.04.3 LTS 5.4.0-107-generic containerd://1.5.9
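If you only need the controller node's address, you can filter that output. A small sketch, assuming the column layout shown above (ROLES is the 3rd column, INTERNAL-IP the 6th); the function name `control_plane_ips` is hypothetical:

```shell
#!/bin/sh
# Read `kubectl get nodes -o wide` output on stdin and print NAME and
# INTERNAL-IP for every node whose ROLES column mentions control-plane.
control_plane_ips() {
  awk 'NR > 1 && $3 ~ /control-plane/ { print $1, $6 }'
}
```

Running `kubectl get nodes -o wide | control_plane_ips` against the cluster above would print `kube-master 192.168.1.141`.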

Step-3: Grab the client certificate and key details of the API server

Note that the file paths printed below are on the node where the kube-apiserver runs. So you will either need to copy these files to the machine where you run etcdctl, or SSH to a node where the kube-apiserver runs.

kubectl get pod -n kube-system kube-apiserver-kube-master -o yaml |grep -i etcd

Alternatively, select the pod by its label instead of its name. Either command prints something like:

kubectl get pod -n kube-system -l component=kube-apiserver -o yaml |grep -i etcd

- --etcd-cafile=/etc/ssl/etcd/ssl/ca.pem

- --etcd-certfile=/etc/ssl/etcd/ssl/node-kube-master.pem

- --etcd-keyfile=/etc/ssl/etcd/ssl/node-kube-master-key.pem

- --etcd-servers=https://192.168.1.141:2379

- --storage-backend=etcd3

- mountPath: /etc/ssl/etcd/ssl

name: etcd-certs-0

path: /etc/ssl/etcd/ssl

name: etcd-certs-0
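Rather than copying the paths by hand, you can pull them out of the pod spec. A minimal sketch, assuming the flags appear as `- --etcd-<name>=<value>` list items as in the output above; `etcd_flag` and `load_etcd_flags` are hypothetical helper names, and the `ETCD_*` variable names are my own choice:

```shell
#!/bin/sh
# Print the value of one --etcd-<name> flag from kube-apiserver pod YAML on stdin.
# Only the first match is kept (relevant if several API server pods are listed).
etcd_flag() {
  sed -n "s/^[[:space:]]*- --etcd-$1=//p" | head -n 1
}

# Fetch the kube-apiserver pod spec once and store the etcd connection details.
# Requires kubectl read access to pods in kube-system.
load_etcd_flags() {
  yaml=$(kubectl get pod -n kube-system -l component=kube-apiserver -o yaml) || return 1
  ETCD_ENDPOINTS=$(printf '%s\n' "$yaml" | etcd_flag servers)
  ETCD_CERT=$(printf '%s\n' "$yaml" | etcd_flag certfile)
  ETCD_KEY=$(printf '%s\n' "$yaml" | etcd_flag keyfile)
  ETCD_CACERT=$(printf '%s\n' "$yaml" | etcd_flag cafile)
}
```

The pattern requires `=` right after the flag name, so `etcd_flag cert` will not accidentally match `--etcd-certfile`.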

Step-4: Use etcdctl to query ETCD

Note that reading the files in /etc/ssl requires sudo permissions. As mentioned above, these files (the certificate, key, and CA paths from the previous output) are present on the master node, so I SSH to the master node; you can also scp the files to a local machine if you prefer.

ETCDCTL_API=3 etcdctl --endpoints <ETCD-SERVER-IP:PORT> --cert=<CLIENT-CERT-FROM-ABOVE-OUTPUT> --key=<CLIENT-KEY-FROM-ABOVE-OUTPUT> --cacert=<CA-CERT-FROM-ABOVE-OUTPUT> <ETCD-SUBCOMMANDS-HERE>
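Since every etcdctl call repeats the same four connection flags, a small wrapper can save typing. This is a sketch under my own assumptions: `etcdq` and the `ETCD_*`/`ETCDCTL_BIN` variable names are hypothetical, and the defaults are the values from this cluster. Because reading the key files requires sudo, define and use it from a root shell (for example after `sudo -i`).

```shell
#!/bin/sh
# Connection defaults for this cluster; export different values before
# defining the function to target another cluster.
: "${ETCD_ENDPOINTS:=https://192.168.1.141:2379}"
: "${ETCD_CERT:=/etc/ssl/etcd/ssl/node-kube-master.pem}"
: "${ETCD_KEY:=/etc/ssl/etcd/ssl/node-kube-master-key.pem}"
: "${ETCD_CACERT:=/etc/ssl/etcd/ssl/ca.pem}"
# Binary to run; can be pointed at another etcdctl build (or a stub).
: "${ETCDCTL_BIN:=etcdctl}"

# Run an etcdctl v3 subcommand with the API server's client credentials.
etcdq() {
  ETCDCTL_API=3 "$ETCDCTL_BIN" \
    --endpoints="$ETCD_ENDPOINTS" \
    --cert="$ETCD_CERT" \
    --key="$ETCD_KEY" \
    --cacert="$ETCD_CACERT" \
    "$@"
}
```

With it defined, `etcdq member list` or `etcdq get /registry/ --prefix --keys-only` runs the same queries as the full commands in the following steps.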

E.g.:

sudo ETCDCTL_API=3 etcdctl --endpoints https://192.168.1.141:2379 --cert=/etc/ssl/etcd/ssl/node-kube-master.pem --key=/etc/ssl/etcd/ssl/node-kube-master-key.pem --cacert=/etc/ssl/etcd/ssl/ca.pem member list

d5f2ec633c3431c2d, started, etcd1, https://192.168.1.141:2380, https://192.168.1.141:2379, false
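Each `member list` line is comma-separated: member ID, status, name, peer URLs (port 2380, used between ETCD members), client URLs (port 2379, used by clients such as the API server), and whether the member is a learner (that last column only appears with etcdctl 3.4 and later). etcdctl can also render this as a table with `-w table`. The sketch below (`describe_members` is a hypothetical name) labels each field of the default output:

```shell
#!/bin/sh
# Label the fields of `etcdctl member list` lines read on stdin.
# Fields are separated by ", "; several URLs inside one field are joined by ",".
describe_members() {
  awk -F', ' 'NF >= 5 {
    printf "id=%s status=%s name=%s peer=%s client=%s learner=%s\n", $1, $2, $3, $4, $5, $6
  }'
}
```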

Step-5: Checking the structure of ETCD keys

For Kubernetes, everything is stored under the “/registry” path. Here is an example to list all the keys inside the /registry path.

Example-1: Listing all keys under the /registry path

sudo ETCDCTL_API=3 etcdctl --endpoints https://192.168.1.141:2379 --cert=/etc/ssl/etcd/ssl/node-kube-master.pem --key=/etc/ssl/etcd/ssl/node-kube-master-key.pem --cacert=/etc/ssl/etcd/ssl/ca.pem get /registry/ --prefix --keys-only

The above command lists the keys present under the /registry path. In my small 4-node cluster, it produced 440 lines of output; here are the first few:
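With hundreds of keys, a per-resource count gives a quicker overview. A minimal sketch (the helper name `keys_per_resource` is hypothetical) that reads the `--keys-only` output on stdin, skips blank lines (etcdctl typically prints one after each key), and counts keys by the path segment that follows /registry/:

```shell
#!/bin/sh
# Count etcd keys per top-level resource, most frequent first.
# "/registry/pods/default/my-pod" split on "/" gives $2="registry", $3="pods".
keys_per_resource() {
  awk -F/ '$2 == "registry" && NF >= 3 { count[$3]++ }
           END { for (r in count) print count[r], r }' | sort -k1,1nr -k2,2
}
```

For example, append `| keys_per_resource` to the Example-1 command to see how many pods, services, secrets, and so on are stored.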

/registry/apiregistration.k8s.io/apiservices/v1.

/registry/apiregistration.k8s.io/apiservices/v1.admissionregistration.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.apiextensions.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.apps

/registry/apiregistration.k8s.io/apiservices/v1.authentication.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.authorization.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.autoscaling

/registry/apiregistration.k8s.io/apiservices/v1.batch

/registry/apiregistration.k8s.io/apiservices/v1.certificates.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.coordination.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.discovery.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.events.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.networking.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.node.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.policy

/registry/apiregistration.k8s.io/apiservices/v1.rbac.authorization.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.scheduling.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1.storage.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.batch

/registry/apiregistration.k8s.io/apiservices/v1beta1.discovery.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.events.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.flowcontrol.apiserver.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.node.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta1.policy

/registry/apiregistration.k8s.io/apiservices/v1beta1.storage.k8s.io

/registry/apiregistration.k8s.io/apiservices/v1beta2.flowcontrol.apiserver.k8s.io

/registry/apiregistration.k8s.io/apiservices/v2.autoscaling

/registry/apiregistration.k8s.io/apiservices/v2beta1.autoscaling

/registry/apiregistration.k8s.io/apiservices/v2beta2.autoscaling

/registry/clusterrolebindings/cluster-admin

/registry/clusterrolebindings/fluentbit-fluent-bit

/registry/clusterrolebindings/kubeadm:get-nodes

/registry/clusterrolebindings/kubeadm:kubelet-bootstrap

Example-2: Querying the list of pods in the default namespace

sudo ETCDCTL_API=3 etcdctl --endpoints https://192.168.1.141:2379 --cert=/etc/ssl/etcd/ssl/node-kube-master.pem --key=/etc/ssl/etcd/ssl/node-kube-master-key.pem --cacert=/etc/ssl/etcd/ssl/ca.pem get /registry/ --prefix --keys-only |grep pods/default

/registry/pods/default/my-pod

/registry/pods/default/my-pod-1

/registry/pods/default/simple-httpd
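One caveat with `grep pods/default`: it would also match keys from a namespace such as `default-2`. Asking etcd for the exact prefix, with a trailing slash, avoids that and also avoids transferring every other key. A sketch, with hypothetical helper names `resource_prefix` and `names_under`:

```shell
#!/bin/sh
# Build the etcd key prefix for a namespaced resource, e.g. pods in "default".
# The trailing slash keeps "default-2" from matching "default".
resource_prefix() {
  printf '/registry/%s/%s/\n' "$1" "$2"
}

# Print only the object names for keys on stdin that start with prefix $1.
names_under() {
  awk -v p="$1" 'index($0, p) == 1 { print substr($0, length(p) + 1) }'
}
```

For example, set `p=$(resource_prefix pods default)`, replace `get /registry/ --prefix --keys-only` in the Example-2 command with `get "$p" --prefix --keys-only`, and pipe the result into `names_under "$p"` instead of grep.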

Example-3: Querying the list of services in the kube-system namespace

sudo ETCDCTL_API=3 etcdctl --endpoints https://192.168.1.141:2379 --cert=/etc/ssl/etcd/ssl/node-kube-master.pem --key=/etc/ssl/etcd/ssl/node-kube-master-key.pem --cacert=/etc/ssl/etcd/ssl/ca.pem get /registry/ --prefix --keys-only |grep services/specs/kube-system

/registry/services/specs/kube-system/coredns

Step-6: Checking the values of the keys (potential security threat)

So far we have only listed keys by name; in this step we will read the value of a key, namely the pod called “my-pod” in the default namespace. Note that this raw content can be dumped by anyone who impersonates the API server in front of the ETCD server, which is possible if the API server's certs/keys are not kept secure.

sudo ETCDCTL_API=3 etcdctl --endpoints https://192.168.1.141:2379 --cert=/etc/ssl/etcd/ssl/node-kube-master.pem --key=/etc/ssl/etcd/ssl/node-kube-master-key.pem --cacert=/etc/ssl/etcd/ssl/ca.pem get /registry/pods/default/my-pod

/registry/pods/default/my-pod

k8s

v1Pod▒

▒

my-poddefault"*$561edfcad-a8f8-413f-a2d6-aea443c20d4a2▒▒▒z▒▒

kubectl-replaceUpdatev▒▒▒FieldsV1:▒

▒{"f:spec":{"f:containers":{"k:{\"name\":\"my-container\"}":{".":{},"f:command":{},"f:image":{},"f:imagePullPolicy":{},"f:name":{},"f:resources":{},"f:terminationMessagePath":{},"f:terminationMessagePolicy":{},"f:volumeMounts":{".":{},"k:{\"mountPath\":\"/bin/entrypoint.sh\"}":{".":{},"f:mountPath":{},"f:name":{},"f:readOnly":{},"f:subPath":{}}}}},"f:dnsPolicy":{},"f:enableServiceLinks":{},"f:restartPolicy":{},"f:schedulerName":{},"f:securityContext":{},"f:terminationGracePeriodSeconds":{},"f:volumes":{".":{},"k:{\"name\":\"configmap-volume\"}":{".":{},"f:configMap":{".":{},"f:defaultMode":{},"f:name":{}},"f:name":{}}}}}B▒▒

Go-http-clientUpdatev▒㶒FieldsV1:▒

▒{"f:status":{"f:conditions":{"k:{\"type\":\"ContainersReady\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}},"k:{\"type\":\"Initialized\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}},"k:{\"type\":\"Ready\"}":{".":{},"f:lastProbeTime":{},"f:lastTransitionTime":{},"f:status":{},"f:type":{}}},"f:containerStatuses":{},"f:hostIP":{},"f:phase":{},"f:podIP":{},"f:podIPs":{".":{},"k:{\"ip\":\"10.233.72.4\"}":{".":{},"f:ip":{}}},"f:startTime":{}}}Bstatus▒

*

configmap-volume▒

my-configmap▒

▒

kube-api-access-tzzw2k▒h

"

▒token

(&

kube-root-ca.crt

ca.crtca.crt

)'

%

namespace

v1metadata.namespace▒▒

my-containerubuntu/bin/entrypoint.sh*BJ9

entrypoint.sh2JL/bin/entrypoint.sh"

kube-api-access-tzzw2-/var/run/secrets/kubernetes.io/serviceaccount"2j/dev/termination-logrAlways▒▒▒▒FileAlways 2

kube-worker-3X`hr▒▒▒default-scheduler▒6

node.kubernetes.io/not-readyExists" NoExecute(▒▒8

node.kubernetes.io/unreachableExists" NoExecute(▒▒▒▒▒PreemptLowerPriority▒

Running#

InitializedTru▒▒▒*2

ReadyTru▒㶒*2'

ContainersReadyTru▒㶒*2$

192.168.1.1472u▒▒▒*2"*

10.233.72.▒▒▒B▒

my-container

▒㶒u▒Unknown▒▒▒ⶒ:Mcontainerd://21f0ae2c94ca86b1b814af9992e70317f05599785ffbc1170e7abf66f5137429 (2docker.io/library/ubuntu:latest:`docker.io/library/ubuntu@sha256:91adsasda875cee98b016668342c489ff0674f247f6ca20dfc91b91c0f28581aeBMcontainerd://9469fe33ab30asd8bc37cd1e1ea69befae2a0ddd9eeeb12d3c1c30c28c4f8HJ

BestEffortZb

10.233.72.4"
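Most of that value is unreadable because Kubernetes stores built-in objects in ETCD as protobuf, prefixed by the 4-byte magic `k8s` followed by a NUL byte (visible as the `k8s` line at the top of the output). The sketch below (hypothetical helper `value_encoding`) checks for that prefix. Feed it the output of the etcdctl get command above with `--print-value-only` added, and pipe the same output through `strings` if you want a more readable dump.

```shell
#!/bin/sh
# Report whether a raw etcd value on stdin starts with the Kubernetes
# protobuf magic "k8s\0" (hex 6b 38 73 00).
value_encoding() {
  magic=$(head -c 4 | od -An -tx1 | tr -d ' \n')
  case "$magic" in
    6b387300) echo protobuf ;;
    *) echo other ;;
  esac
}
```

Objects stored as JSON (custom resources, for example) start with `{` instead and are reported as `other`.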

Here is another example that shows why keeping the API server's identity (certs/keys) secure is vital. Let’s create a secret called “foo-bar” in the default namespace:

kubectl create secret generic foo-bar --from-literal mypass=verysafepassword

secret/foo-bar created

Now, reading it with etcdctl, you may notice that the password is visible in plain text.

sudo ETCDCTL_API=3 etcdctl --endpoints https://192.168.1.141:2379 --cert=/etc/ssl/etcd/ssl/node-kube-master.pem --key=/etc/ssl/etcd/ssl/node-kube-master-key.pem --cacert=/etc/ssl/etcd/ssl/ca.pem get /registry/secrets/default/foo-bar

/registry/secrets/default/foo-bar

k8s

v1Secret▒

▒

foo-bardefault"*$c6a919d5-9838-44dc-8d96-67000be42d0a2▒▒▒▒z▒c

kubectl-createUpdatev▒▒▒▒FieldsV1:/

-{"f:data":{".":{},"f:mypass":{}},"f:type":{}}B

mypassverysafepasswordOpaque"

Important Note: This post is meant to help visualize the database and how things work internally. Kubernetes does not encrypt Secrets in ETCD by default (as the output above shows), but clusters that enable encryption at rest through an EncryptionConfiguration store those values as ciphertext, so this way of accessing the DB exposes much less. However, if the API server’s certs/keys and sudo access to the master node are both leaked, that is a far bigger concern.
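To check whether a particular value is encrypted at rest, look at its first bytes: values written by an encryption provider start with `k8s:enc:<provider>:v1:<key-name>:`, while unencrypted objects start with plain `k8s` followed by a NUL byte, as in the foo-bar output above. A minimal sketch (the helper name `at_rest_status` is hypothetical):

```shell
#!/bin/sh
# Report whether a raw etcd value on stdin was written by an encryption provider.
# Encrypted values start with "k8s:enc:<provider>:v1:<key-name>:"; the "identity"
# provider (no encryption) writes the normal unencrypted format instead.
at_rest_status() {
  # Keep only the start of the value and drop NUL bytes so the shell can hold it.
  head_bytes=$(head -c 64 | tr -d '\000')
  case "$head_bytes" in
    k8s:enc:*)
      rest=${head_bytes#k8s:enc:}
      echo "encrypted (${rest%%:*})"
      ;;
    *)
      echo "not encrypted"
      ;;
  esac
}
```

Adding `--print-value-only` to the foo-bar etcdctl command and piping it into `at_rest_status` would, given the output shown earlier, report `not encrypted` for this cluster.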
