Adding a Node to a Kubernetes Cluster Using Kubespray

Kubespray is a great tool for creating a production-ready Kubernetes cluster. However, it is not limited to spinning up the cluster; you can also use it for other cluster management operations, such as scaling or upgrading the cluster. In this post, we will scale up an existing Kubernetes cluster that consists of 1 master node and 1 worker node.

The following is the node status before scaling with Kubespray.

ps@controller:~$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

kube-master Ready control-plane,master 11h v1.23.3 192.168.1.129 <none> Ubuntu 20.04.3 LTS 5.4.0-99-generic containerd://1.5.9

kube-worker Ready <none> 11h v1.23.3 192.168.1.130 <none> Ubuntu 20.04.3 LTS 5.4.0-99-generic containerd://1.5.9

In the following procedure, we assume that the initial cluster was created using Kubespray. The steps to add a new node to the cluster are as follows.

Step-1: Identify the IP address of the new node.

The node I plan to add has an IP address of 192.168.1.131, and the node’s name is kube-worker-2.

Step-2: Add the entries for the new node in the existing inventory file.

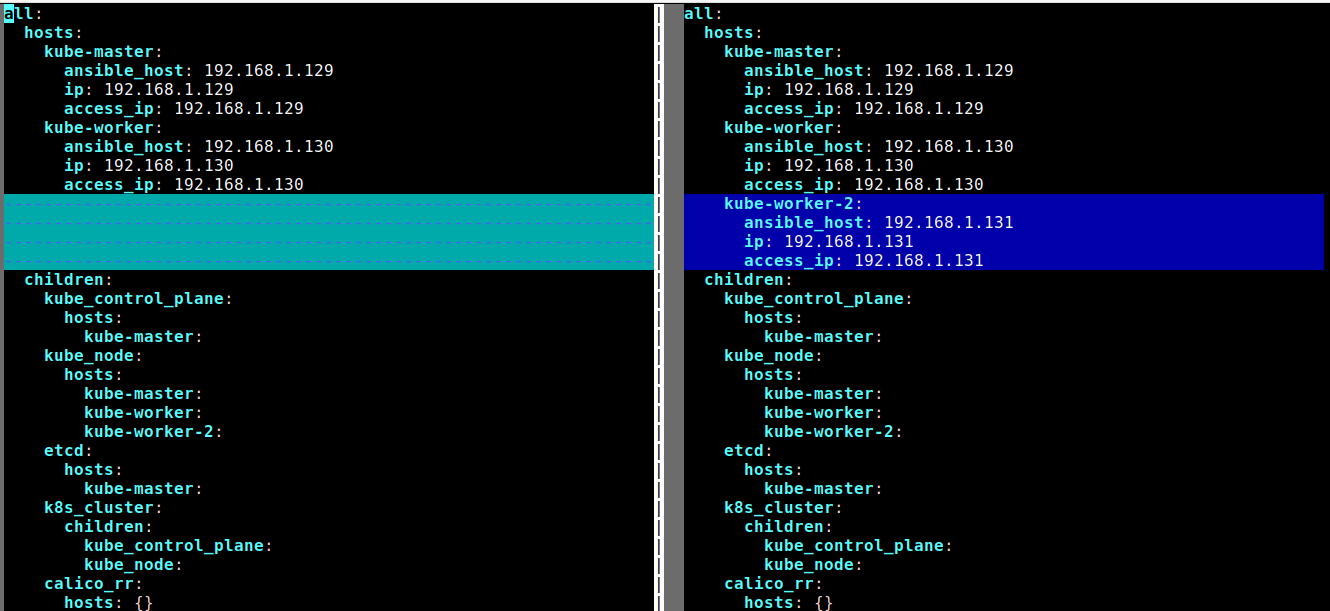

The image above is a side-by-side comparison showing the addition of a new host to the inventory file. The left side shows the old inventory, and the right side shows the updated inventory. I have named the new node “kube-worker-2”; its IP is 192.168.1.131.
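Since the inventory change is only visible in the screenshot, here is a minimal sketch of what the updated file might look like. The layout (the `kube_control_plane`, `kube_node`, `etcd`, and `k8s_cluster` groups and the `ansible_host`/`ip`/`access_ip` keys) is an assumption based on a typical Kubespray YAML inventory, not a copy of the file in the image. The `host_in_inventory` helper is hypothetical and only does a plain text match.

```shell
#!/usr/bin/env bash
# Sketch only: this inventory layout is an assumption (a typical Kubespray
# YAML inventory), not a copy of the file shown in the screenshot.
set -euo pipefail

sample_inventory="$(mktemp)"
cat > "$sample_inventory" <<'EOF'
all:
  hosts:
    kube-master:
      ansible_host: 192.168.1.129
      ip: 192.168.1.129
      access_ip: 192.168.1.129
    kube-worker:
      ansible_host: 192.168.1.130
      ip: 192.168.1.130
      access_ip: 192.168.1.130
    kube-worker-2:            # new host entry
      ansible_host: 192.168.1.131
      ip: 192.168.1.131
      access_ip: 192.168.1.131
  children:
    kube_control_plane:
      hosts:
        kube-master:
    kube_node:
      hosts:
        kube-worker:
        kube-worker-2:        # new worker added to the node group
    etcd:
      hosts:
        kube-master:
    k8s_cluster:
      children:
        kube_control_plane:
        kube_node:
EOF

# host_in_inventory (hypothetical helper): plain text match, not a YAML parse.
# Succeeds when FILE has a "HOST:" key and an "ansible_host: IP" line.
host_in_inventory() {
  local file="$1" host="$2" ip="$3"
  grep -qE "^[[:space:]]+${host}:" "$file" &&
    grep -qF "ansible_host: ${ip}" "$file"
}

if host_in_inventory "$sample_inventory" kube-worker-2 192.168.1.131; then
  echo "kube-worker-2 (192.168.1.131) is present in the inventory"
fi
```

To check a real inventory, point the helper at inventory/mycluster/hosts.yaml instead of the temporary sample file.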

Step-3: Refresh the facts of all the hosts

Run the following command to collect the facts of all the hosts.

ansible-playbook -i inventory/mycluster/hosts.yaml facts.yml -u <ssh-username>

Step-4: Finally, add the node to the cluster

Run the following command by supplying the new node name as input.

ansible-playbook -i inventory/mycluster/hosts.yaml cluster.yml -u <ssh-username> -b -l <new-node-name>

Note: This uses the cluster.yml playbook, not scale.yml. Going by the playbook names, scale.yml looks like the appropriate choice. However, in my setup scale.yml installed the components needed for a Kubernetes worker node but did not join the node to the cluster, so I used cluster.yml instead. The Kubespray documentation does describe scale.yml for adding worker nodes, so the behavior may differ with your Kubespray version.
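Steps 3 and 4 can be wrapped in one small script. This is a sketch under stated assumptions: the variable names, their defaults (`ubuntu` as the SSH user, `kube-worker-2` as the node), and the `run`/`add_node` helpers are all hypothetical. By default it only prints the commands; set `DRY_RUN=0` to execute them. The facts run is deliberately not limited to the new node, because a run restricted with `-l` only gathers facts for the hosts it targets.

```shell
#!/usr/bin/env bash
# Sketch of Step-3 and Step-4 as one script. Commands are only printed
# unless DRY_RUN=0 is set. Variable names and defaults are assumptions.
set -euo pipefail

INVENTORY="${INVENTORY:-inventory/mycluster/hosts.yaml}"
SSH_USER="${SSH_USER:-ubuntu}"          # placeholder for <ssh-username>
NEW_NODE="${NEW_NODE:-kube-worker-2}"   # placeholder for <new-node-name>
DRY_RUN="${DRY_RUN:-1}"

# run: print the command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

add_node() {
  # Step-3: refresh facts for all hosts (no limit applied here).
  run ansible-playbook -i "$INVENTORY" facts.yml -u "$SSH_USER"
  # Step-4: run cluster.yml restricted to the new node (-l is --limit).
  run ansible-playbook -i "$INVENTORY" cluster.yml -u "$SSH_USER" -b -l "$NEW_NODE"
}

add_node
```

Run it from the Kubespray checkout so that the relative playbook and inventory paths resolve.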

Here is the node status of the cluster after adding the node. If the new node's status is not Ready, reboot it by running “sudo reboot” on that node.

kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

kube-master Ready control-plane,master 12h v1.23.3 192.168.1.129 <none> Ubuntu 20.04.3 LTS 5.4.0-99-generic containerd://1.5.9

kube-worker Ready <none> 12h v1.23.3 192.168.1.130 <none> Ubuntu 20.04.3 LTS 5.4.0-99-generic containerd://1.5.9

kube-worker-2 Ready <none> 12m v1.23.3 192.168.1.131 <none> Ubuntu 20.04.3 LTS 5.4.0-99-generic containerd://1.5.9
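Instead of reading the STATUS column by eye, the check can be scripted. The following is a minimal sketch; `not_ready_nodes` is a hypothetical helper that filters the plain `kubectl get node` output, and it assumes STATUS is the second column as in the listing above.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".
set -euo pipefail

# not_ready_nodes (hypothetical helper): reads `kubectl get node` output on
# stdin, skips the header row, and prints the names of nodes whose STATUS is
# not "Ready". A cordoned node ("Ready,SchedulingDisabled") is also printed.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

if command -v kubectl >/dev/null 2>&1; then
  kubectl get node | not_ready_nodes ||
    echo "could not query the cluster" >&2
else
  echo "kubectl not found; skipping live check" >&2
fi
```

An empty result means every node reports Ready. To block until a specific node becomes Ready, `kubectl wait --for=condition=Ready node/kube-worker-2 --timeout=300s` is an alternative.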

Removing the node from the cluster:

Step-1: Run the following command to remove the node from the cluster. Note that the playbook name here is remove-node.yml.

ansible-playbook -i inventory/mycluster/hosts.yaml remove-node.yml -b -u <ssh-username> -e node=<node-for-removal> -e reset_nodes=false -e allow_ungraceful_removal=true

Step-2: Remove that host info from the inventory file.

Note: After removing the node from the cluster, I tried to add it back; however, it was not listed in the “kubectl get node” output. After I rebooted all the nodes, the node was listed again.
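The removal command in Step-1 always passes `reset_nodes=false` and `allow_ungraceful_removal=true`. The Kubespray documentation describes these two extra-vars as intended for a node that is offline, so a variant that adds them only in that case may be worth considering. The sketch below only prints the command; the `remove_node_cmd` helper, the variable names, and the `ubuntu` default are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the remove-node.yml command for a node. Nothing is executed.
set -euo pipefail

INVENTORY="${INVENTORY:-inventory/mycluster/hosts.yaml}"
SSH_USER="${SSH_USER:-ubuntu}"   # placeholder for <ssh-username>

# remove_node_cmd NODE [online|offline]  (hypothetical helper)
remove_node_cmd() {
  local node="$1" state="${2:-online}"
  local cmd=(ansible-playbook -i "$INVENTORY" remove-node.yml
             -b -u "$SSH_USER" -e "node=$node")
  if [ "$state" = "offline" ]; then
    # Assumption: per the Kubespray docs, these extra-vars are meant for a
    # node that cannot be reached, so they are added only in that case.
    cmd+=(-e reset_nodes=false -e allow_ungraceful_removal=true)
  fi
  echo "${cmd[*]}"
}

remove_node_cmd kube-worker-2           # node is reachable
remove_node_cmd kube-worker-2 offline   # node is unreachable
```

The second call prints the same command as Step-1 with kube-worker-2 filled in; the first omits the two extra-vars, which leaves the playbook on its default behavior.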